I’ve been using GoHugo (Hugo) as the static site generator for all of my sites for about three years now and I love its speed and flexibility. That said, a recent policy change at a VPS host had me reassessing my options. Now that I have my own Synology with Docker capability, I was looking for a way to go ultra-slim and run my own builder, using a lightweight (read: VERY low spec) OpenVZ VPS as the Nginx front-end web server behind a CDN like Cloudflare. Previously I’d used Netlify, but the rebuild limits on their free tier were getting to be a bit much.

I regularly create content that I want to release automatically at a set date and time in the future. By default Hugo leaves out any page dated in the future, so a page only goes live once a build runs after its publish date. To accomplish this, Hugo needs to rebuild the site periodically in the background so that when new pages are ready to go live, they are automatically built and available for the world to see. When I’m debugging or writing articles I run the local environment on my MacBook Pro, and only when I’m happy with the final result do I push to the Git repo. Hence I need a set-and-forget automatic build environment. Before settling on this design I’d done this on spare machines (of which I currently have none), on a beefier VPS using cron jobs and scripts, and on my Synology in a virtual machine doing the same (which wasn’t reliable).

Requirements

The VPS needed to be capable of running Nginx and serving folders that are RSync’d from the DropBox. I searched through LowEnd Stock looking for deals with 256MB of RAM and SSD storage at a cheap annual rate, and at the time got the “Special Mini Sailor OpenVZ SSD” for $6 USD/yr, which had that amount of RAM and 10GB of SSD space, running CentOS 7. (Note: these have since sold out, but there are plenty of others around that price range at the time of writing.)

Setting up RSync, Nginx, SSH etc. is beyond the scope of this article, however it is relatively straightforward and there are plenty of guides available if you’re interested.

My sites are controlled via a Git workflow, which is quite common for managing static websites. In my case I’ve used GitHub, GitLab and most recently settled on the lightweight and solid Gitea, which I now also self-host on my Synology. Any of these would work fine, but having the repos on the same device makes the Git clone very fast; you can adjust that step if you’re using an external hosting platform.

I also had three sites I wanted to build from the same platform. The requirements roughly were:

- Must stay within the Synology DSM Docker environment (no hacking and no Portainer, which means Drone CI is out)

- Must use all self-hosted, owned docker/system environment

- A single docker image to build multiple websites

- Support error logging and notifications on build errors

- Must be lightweight

- Must be an updated/recent/current docker image of Hugo

The Docker Image And Folders

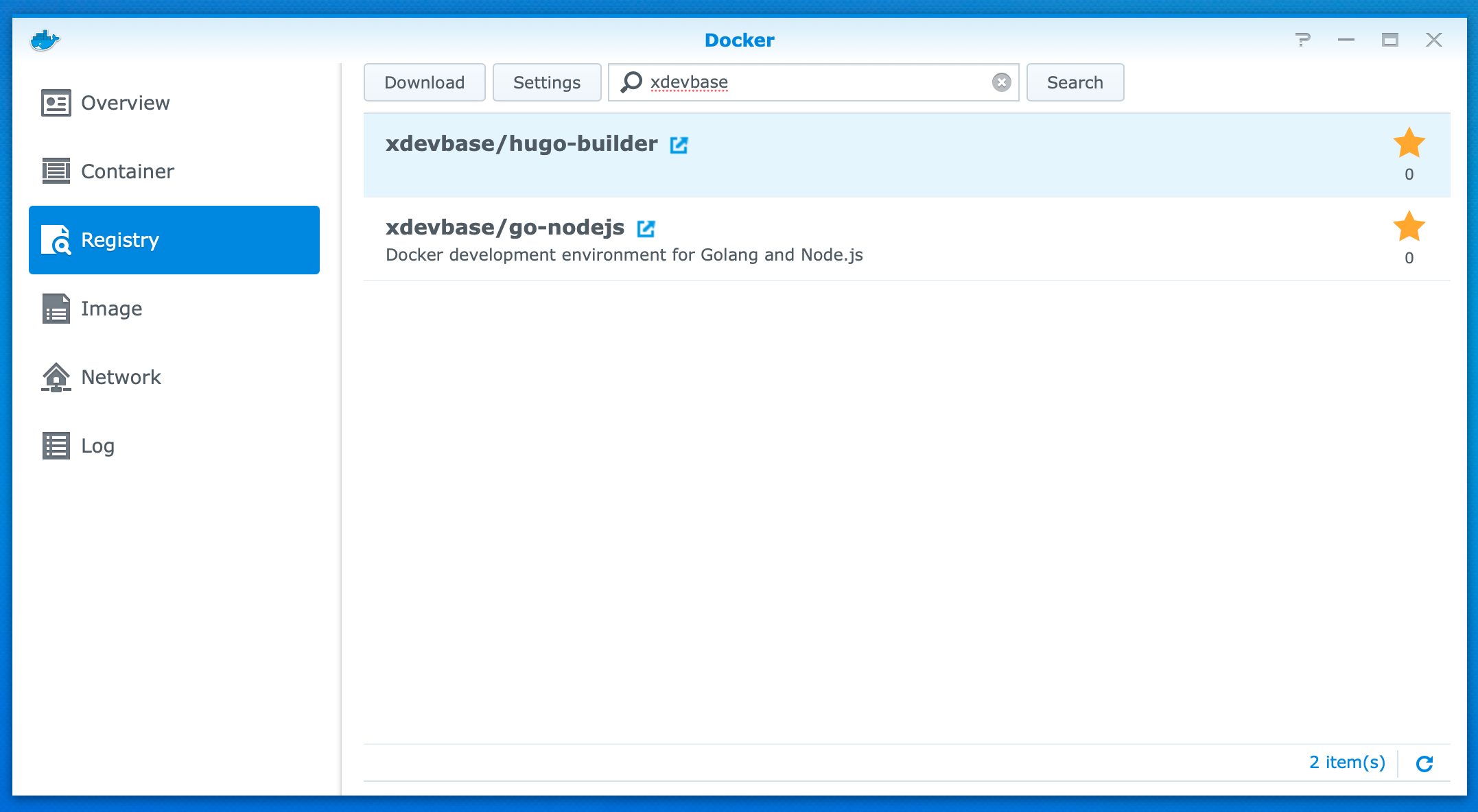

I struggled for a while with different images because I needed one that included RSync, Git and Hugo, and that allowed me to modify the startup script. Some of the Hugo build images out there were quite restricted to a set workflow, like running the local server to serve from memory, or assumed you had a single website. The XdevBase / HugoBuilder image was perfect for what I needed. Preinstalled it has:

- rsync

- git

- Hugo (Obviously)

Search for “xdevbase” in the Docker Registry and you should find it. Select it and download the latest version; at the time of writing it’s very lightweight, taking up only 84MB.
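Once the container is up, it can be worth confirming that every tool the build scripts rely on is really on the PATH. The function below is a minimal sketch: check_tools is a hypothetical name, and including ssh and curl in the list is an assumption based on what the scripts in this article call.

```shell
#!/bin/sh
# Hypothetical helper: report any command that is not on the PATH.
# Returns the number of missing tools, so 0 means everything was found.
check_tools() {
    missing=0
    for tool in "$@"; do
        if ! command -v "$tool" > /dev/null 2>&1; then
            echo "missing: $tool"
            missing=$((missing + 1))
        fi
    done
    return "$missing"
}
```

From a shell inside the container, something like check_tools hugo git rsync ssh curl && echo "all present" gives a quick yes/no before any builds are attempted.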

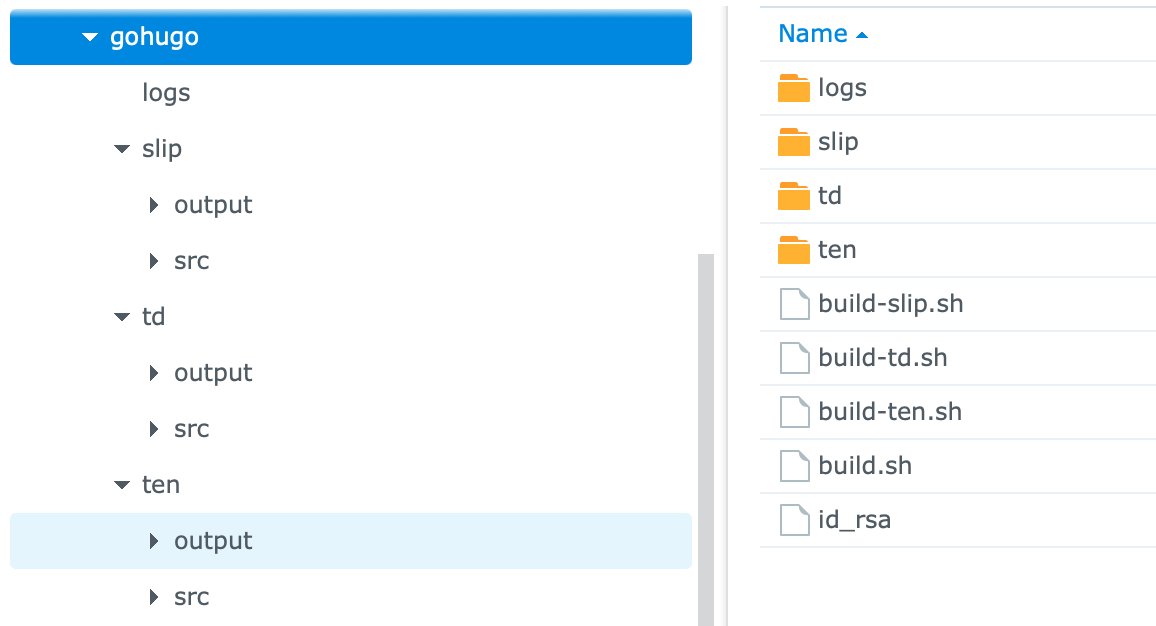

After this, open “File Station” and start building the supporting folder structure you’ll need. I had three websites: TechDistortion, The Engineered Network and SlipApps, hence I created three folders. First, under the docker folder (which you should already have if you’ve played with Synology Docker before), create a sub-folder for Hugo; I imaginatively called mine “gohugo”. Under that, create a sub-folder for each site plus one for the logs.

Under each website folder I also created two more folders: “src” for the website source I’ll be checking out of Gitea, and “output” for the final publicly generated Hugo website output from the generator.
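If you prefer an SSH session on the Synology to clicking through File Station, the same tree can be created with mkdir. This is a sketch only: make_gohugo_tree is a hypothetical name, and /volume1/docker/gohugo is an assumed location for the docker share, so adjust it to wherever yours lives.

```shell
#!/bin/sh
# Hypothetical helper: create <base>/logs plus <base>/<site>/src and <base>/<site>/output.
# Usage: make_gohugo_tree /volume1/docker/gohugo ten td slip
make_gohugo_tree() {
    [ "$#" -ge 1 ] || return 1
    base="$1"
    shift
    mkdir -p "$base/logs" || return 1
    for site in "$@"; do
        # src holds the Git checkout, output holds the generated Hugo site
        mkdir -p "$base/$site/src" "$base/$site/output" || return 1
    done
}
```

Running it twice is harmless, since mkdir -p does not complain about folders that already exist.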

Scripts

I spent a fair amount of time perfecting the scripts below. The idea was to have an over-arching script that calls each site’s build one after the other in a never-ending loop, with a mandatory wait between builds. If you instead run independent containers, each on its own timer, the two or three builds will eventually overlap with each other and with whatever else the Synology is doing; in my experience that led to an overload condition the Synology would not recover from. The only viable option I found was to serialise the builds, and the easiest way to do that is with a single container like mine.
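Stripped to its essentials, that serialised design is a single pass over every site, repeated forever. The sketch below assumes the per-site scripts follow a /root/build-<site>.sh naming pattern; SITES, SCRIPT_DIR, LOG_FILE, PAUSE, log and build_all_once are hypothetical names chosen for illustration.

```shell
#!/bin/sh
# Hypothetical settings; the defaults mirror the folder mappings used in this article.
SITES="${SITES:-ten td slip}"
SCRIPT_DIR="${SCRIPT_DIR:-/root}"
LOG_FILE="${LOG_FILE:-/root/logs/main-build-log.txt}"
PAUSE="${PAUSE:-5m}"

# Append a time-stamped line to the main log.
log() {
    echo "$(date) :: $*" >> "$LOG_FILE"
}

# One pass: each build runs to completion before the pause and the next site,
# so two builds can never overlap.
build_all_once() {
    for site in $SITES; do
        log "$site Build Called"
        "$SCRIPT_DIR/build-$site.sh"
        # $? here is the exit status of the build script that just finished
        log "$site Build Complete (exit $?), Sleeping"
        sleep "$PAUSE"
    done
}

# The container command would then loop forever:
# while :; do build_all_once; done
```

Adding a site then means adding one word to SITES rather than copying another block of the loop.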

Using the “Text Editor” on the Synology, or your text editor of choice and then copying the files across to the correct folder, create a main build.sh file and one build-xyz.sh file for each site you want to build.

```shell
#!/bin/sh
# Main build.sh

# Stash the current time and date in the log file and note the start of the docker
current_time=$(date)
echo "$current_time :: GoHugo Docker Startup" >> /root/logs/main-build-log.txt

while :
do
    current_time=$(date)
    echo "$current_time :: TEN Build Called" >> /root/logs/main-build-log.txt
    /root/build-ten.sh
    current_time=$(date)
    echo "$current_time :: TEN Build Complete, Sleeping" >> /root/logs/main-build-log.txt
    sleep 5m

    current_time=$(date)
    echo "$current_time :: TD Build Called" >> /root/logs/main-build-log.txt
    /root/build-td.sh
    current_time=$(date)
    echo "$current_time :: TD Build Complete, Sleeping" >> /root/logs/main-build-log.txt
    sleep 5m

    current_time=$(date)
    echo "$current_time :: SLIP Build Called" >> /root/logs/main-build-log.txt
    /root/build-slip.sh
    current_time=$(date)
    echo "$current_time :: SLIP Build Complete, Sleeping" >> /root/logs/main-build-log.txt
    sleep 5m
done

current_time=$(date)
echo "$current_time :: GoHugo Docker Build Loop Ungraceful Exit" >> /root/logs/main-build-log.txt
curl -s -F "token=xxxthisisatokenxxx" -F "user=xxxthisisauserxxx1" -F "title=Hugo Site Builds" -F "message=Ungraceful Exit from Build Loop" https://api.pushover.net/1/messages.json

# When debugging it's handy to jump out into the shell, but once it's working okay, comment this out:
#sh
```

This creates a main build log file and calls each sub-script in sequence. Because the while loop never ends on its own, the lines after it are a safety net: if the script ever drops out of the loop, a Pushover API notification lets me know.
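The same Pushover call could be wrapped in a small function and reused in each sub-script’s failure branches, which would also cover the “notifications on build errors” requirement. This is a sketch: notify, PUSHOVER_TOKEN and PUSHOVER_USER are hypothetical names, and the defaults are the article’s placeholders, not real credentials. It uses curl’s --form-string rather than -F so that a message starting with @ or < is sent as text instead of being read as a file.

```shell
#!/bin/sh
# Placeholder credentials: substitute your own Pushover application token and user key.
PUSHOVER_TOKEN="${PUSHOVER_TOKEN:-xxxthisisatokenxxx}"
PUSHOVER_USER="${PUSHOVER_USER:-xxxthisisauserxxx1}"

# Hypothetical helper: send $1 as a Pushover message titled "Hugo Site Builds".
notify() {
    curl -s \
        --form-string "token=$PUSHOVER_TOKEN" \
        --form-string "user=$PUSHOVER_USER" \
        --form-string "title=Hugo Site Builds" \
        --form-string "message=$1" \
        https://api.pushover.net/1/messages.json > /dev/null
}
```

In a sub-script, a failure branch would then call something like notify "TEN: Hugo build failed" next to the existing log line.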

Since all three sub-scripts are effectively identical except for their directories and repositories, only The Engineered Network script follows:

```shell
#!/bin/sh
# BUILD The Engineered Network website: build-ten.sh

# Set Time Stamp of this build
current_time=$(date)
echo "$current_time :: TEN Build Started" >> /root/logs/ten-build-log.txt

# Clear the source folder, dotfiles such as .git included; these patterns never match . or ..
rm -rf /ten/src/* /ten/src/.[!.]* /ten/src/..?* 2> /dev/null
current_time=$(date)
if [ -z "$(ls -A /ten/src)" ]; then
    echo "$current_time :: Repository (TEN) successfully cleared." >> /root/logs/ten-build-log.txt
else
    echo "$current_time :: Repository (TEN) not cleared." >> /root/logs/ten-build-log.txt
fi

# The following is easy since my Gitea repos are on the same device. You could also set this up to clone from an external repo.
git clone --quiet /repos/engineered.git /ten/src/
success=$?
current_time=$(date)
if [ $success -eq 0 ]; then
    echo "$current_time :: Repository (TEN) successfully cloned." >> /root/logs/ten-build-log.txt
else
    echo "$current_time :: Repository (TEN) not cloned." >> /root/logs/ten-build-log.txt
    # Stop here rather than wipe the output and rsync --delete an empty site to the VPS
    exit 1
fi

# Clear the previous build output
rm -rf /ten/output/* /ten/output/.[!.]* /ten/output/..?* 2> /dev/null
current_time=$(date)
if [ -z "$(ls -A /ten/output)" ]; then
    echo "$current_time :: Site (TEN) successfully cleared." >> /root/logs/ten-build-log.txt
else
    echo "$current_time :: Site (TEN) not cleared." >> /root/logs/ten-build-log.txt
fi

hugo -s /ten/src/ -d /ten/output/ -b "https://engineered.network" --quiet
success=$?
current_time=$(date)
if [ $success -eq 0 ]; then
    echo "$current_time :: Site (TEN) successfully generated." >> /root/logs/ten-build-log.txt
else
    echo "$current_time :: Site (TEN) not generated." >> /root/logs/ten-build-log.txt
    # Never sync a failed or partial build to the live site
    exit 1
fi

# -a already implies -r, and -v would contradict --quiet, so only -az is kept
rsync -az --quiet --delete -e 'ssh -p 22' /ten/output/ bobtheuser@myhostsailorvps:/var/www/html/engineered
success=$?
current_time=$(date)
if [ $success -eq 0 ]; then
    echo "$current_time :: Site (TEN) successfully synchronised." >> /root/logs/ten-build-log.txt
else
    echo "$current_time :: Site (TEN) not synchronised." >> /root/logs/ten-build-log.txt
fi

current_time=$(date)
echo "$current_time :: TEN Build Ended" >> /root/logs/ten-build-log.txt
```

The above script can be broken down into several steps as follows:

- Clear the Hugo Source directory

- Pull the current released Source code from the Git repo

- Clear the Hugo Output directory

- Hugo generate the Output of the website

- RSync the output to the remote VPS

Each step has a pass/fail check and logs the result either way.
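Because the sub-scripts differ only in a handful of values, they could also be collapsed into one parameterised function. This is a sketch rather than the script above: build_site, log_step, SITE_ROOT and LOG_DIR are hypothetical names, with defaults that mirror this article’s mappings (an empty SITE_ROOT gives /ten/src and so on). It stops at the first failed step, so rsync --delete is never run against an empty or half-built output folder.

```shell
#!/bin/sh
SITE_ROOT="${SITE_ROOT:-}"
LOG_DIR="${LOG_DIR:-/root/logs}"

# Append a time-stamped line to the log file given as $1.
log_step() {
    file="$1"
    shift
    echo "$(date) :: $*" >> "$file"
}

# Usage: build_site <site> <repo> <base-url> <rsync-destination>
build_site() {
    site="$1" repo="$2" base_url="$3" dest="$4"
    src="$SITE_ROOT/$site/src"
    out="$SITE_ROOT/$site/output"
    log="$LOG_DIR/$site-build-log.txt"

    log_step "$log" "$site Build Started"

    # Clear the source folder, dotfiles included; the patterns never match . or ..
    rm -rf "$src"/* "$src"/.[!.]* "$src"/..?* 2> /dev/null
    if ! git clone --quiet "$repo" "$src"; then
        log_step "$log" "Repository ($site) not cloned, stopping."
        return 1
    fi

    rm -rf "$out"/* "$out"/.[!.]* "$out"/..?* 2> /dev/null
    if ! hugo -s "$src" -d "$out" -b "$base_url" --quiet; then
        log_step "$log" "Site ($site) not generated, stopping."
        return 1
    fi

    # Only reached after a successful build, so --delete never mirrors an empty folder.
    if ! rsync -az --quiet --delete -e 'ssh -p 22' "$out"/ "$dest"; then
        log_step "$log" "Site ($site) not synchronised."
        return 1
    fi

    log_step "$log" "$site Build Ended"
}
```

With this in place, The Engineered Network build would reduce to a single call: build_site ten /repos/engineered.git https://engineered.network bobtheuser@myhostsailorvps:/var/www/html/engineered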

Your SSH Key

For this to work you need to confirm that RSync can push to the remote VPS securely over SSH. To do that, take the private id_rsa key (preferably from a freshly generated key pair whose public key is in the VPS user’s authorized_keys) and place it in the /docker/gohugo/ folder on the Synology, ready for the next step. As they say, it should “just work”, but you can test whether it does once your docker is running. Open the GoHugo docker, go to the Terminal tab, choose Create –> Launch with command “sh”, then select the “sh” terminal window. In there enter:

```shell
ssh bobtheuser@myhostsailorvps -p 22
```

That should log you in without a password, securely via ssh. Once it’s working you can exit that terminal and smile. If not, you’ll need to dig into the SSH keys which is beyond the scope of this article.
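Once the interactive login works, a non-interactive variant can be handy inside scripts, for example to check the connection before an rsync. This sketch assumes OpenSSH 7.6 or later in the image for StrictHostKeyChecking=accept-new, which trusts a host key on first use; check_ssh is a hypothetical name. BatchMode=yes makes ssh fail instead of prompting for a password.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if key-based login works without any prompt.
# $1 = user@host, $2 = port (defaults to 22)
check_ssh() {
    ssh -o BatchMode=yes -o ConnectTimeout=10 \
        -o StrictHostKeyChecking=accept-new \
        -p "${2:-22}" "$1" true
}
```

For example, check_ssh bobtheuser@myhostsailorvps 22 && echo "key login OK" from the container’s terminal.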

Gitea Repo

This is now specific to my use case. You could also clone your repo from any other location, but for me it was quicker, easier and simpler to map my repo from the Gitea Docker folder location. If you’re like me and running your own Gitea on the Synology, you’ll find that repo directory under the /docker/gitea sub-directories at …data/git/repositories/ and that’s it. Of course many will not be doing that; setting up external Git cloning isn’t too difficult, but it is beyond the scope of this article.
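Gitea stores each repository as a bare repo at repositories/<owner>/<name>.git, so with the owner folder mapped to /repos the clone source becomes /repos/<name>.git. A quick way to confirm the mapping from inside the container is to ask Git whether the path is a bare repository; is_bare_repo is a hypothetical helper name used for illustration.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 is a bare Git repository.
# git rev-parse prints "true" for a bare repo and errors out for a non-repo path.
is_bare_repo() {
    [ "$(git --git-dir="$1" rev-parse --is-bare-repository 2> /dev/null)" = "true" ]
}
```

For example, is_bare_repo /repos/engineered.git && echo "repo mapped correctly".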

Configuring The Docker Container

Under the Docker –> Image section, select the downloaded image then “Launch” it, set the Container Name to “gohugo” (or whatever name you want…doesn’t matter) then configure the Advanced Settings as follows:

- Enable auto-restart: Checked

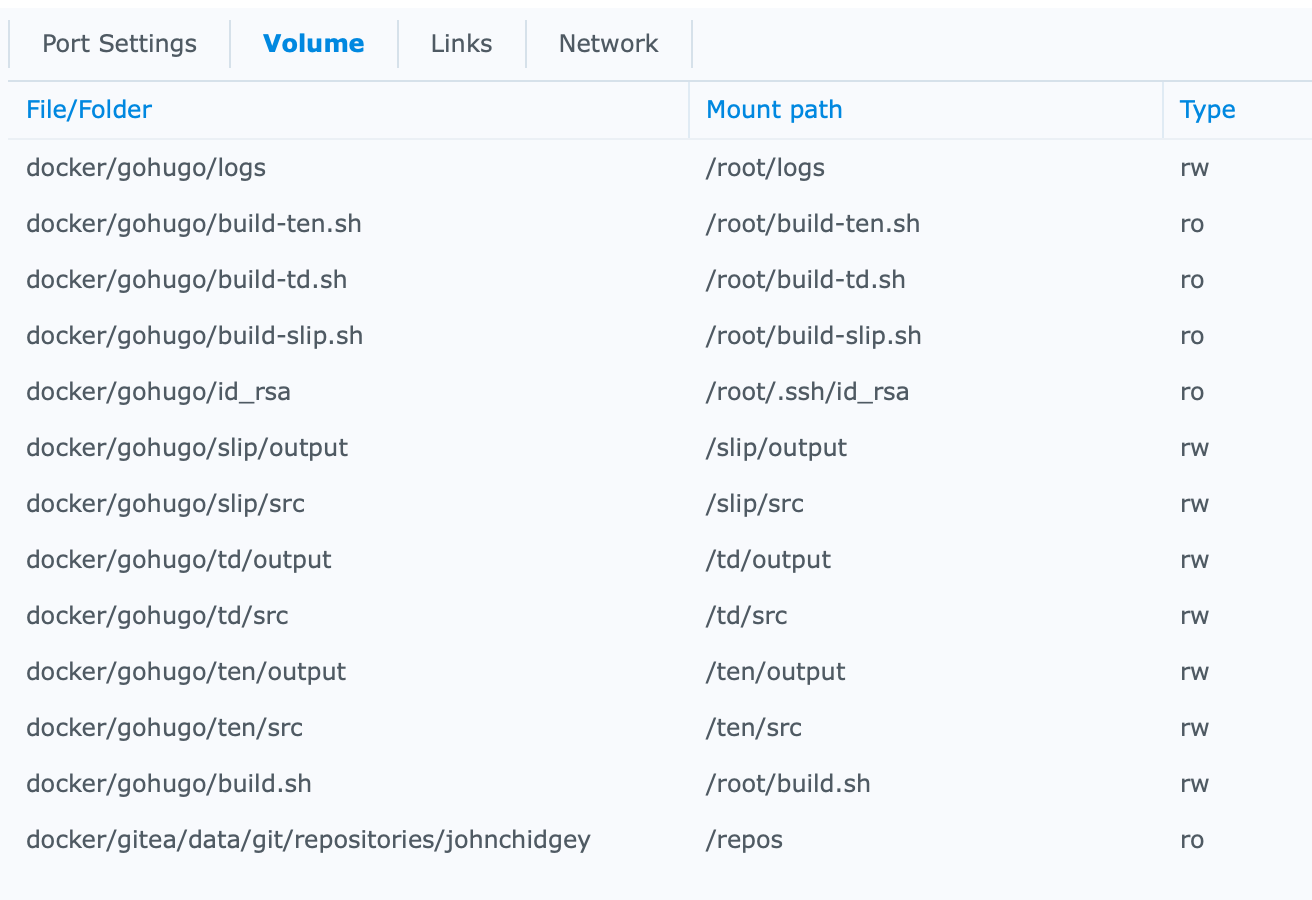

- Volume: (See below)

- Network: Leave it as bridge is fine

- Port Settings: Since I’m using this as a builder I don’t care about web-server functionality so I left this at Auto and never use that feature

- Links: Leave this empty

- Environment –> Command: /root/build.sh (Really important to set this start-up command here and now, since thanks to Synology’s DSM Docker implementation, you can’t change this after the Docker container has been created without destroying and recreating the entire docker container!)

There are a lot of little things to add here to make this work for all the sites. If you want to add more sites in future, stopping the container, adding folders and modifying the scripts is straightforward.

Add the following Files (where xxx, yyy and zzz are the script names for the sites we created above, and aaa is your local repo folder name):

- docker/gohugo/build-xxx.sh map to /root/build-xxx.sh (Read-Only)

- docker/gohugo/build-yyy.sh map to /root/build-yyy.sh (Read-Only)

- docker/gohugo/build-zzz.sh map to /root/build-zzz.sh (Read-Only)

- docker/gohugo/build.sh map to /root/build.sh

- docker/gohugo/id_rsa map to /root/.ssh/id_rsa (Read-Only)

- docker/gitea/data/git/repositories/aaa map to /repos (Read-Only), only needed for a locally hosted Gitea repo

Add the following Folders:

- docker/gohugo/xxx/output map to /xxx/output

- docker/gohugo/xxx/src map to /xxx/src

- docker/gohugo/yyy/output map to /yyy/output

- docker/gohugo/yyy/src map to /yyy/src

- docker/gohugo/zzz/output map to /zzz/output

- docker/gohugo/zzz/src map to /zzz/src

- docker/gohugo/logs map to /root/logs

When finished and fully built the Volumes will look something like this:

Apply the Advanced Settings then Next and select “Run this container after the wizard is finished” then Apply and away we go.

Of course, you can put whatever folder structure and naming you like, but I like keeping my abbreviations consistent and brief for easier coding and fault-finding. Feel free to use artistic licence as you please…
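For reference, roughly the same container could be created from an SSH session on the Synology (usually with sudo) instead of the DSM wizard. This is a sketch under several assumptions: the image is published as xdevbase/hugobuilder, the docker share lives at /volume1/docker, the sites are ten, td and slip, and GITEA_OWNER stands in for the aaa folder name above. create_gohugo_container is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper mirroring the wizard settings: auto-restart, the file and
# folder mappings listed above, and /root/build.sh as the start-up command.
create_gohugo_container() {
    d=/volume1/docker
    owner="${GITEA_OWNER:-aaa}"
    # Reuse this function's positional parameters to collect the docker options
    set -- --restart always
    for site in ten td slip; do
        set -- "$@" \
            -v "$d/gohugo/build-$site.sh:/root/build-$site.sh:ro" \
            -v "$d/gohugo/$site/src:/$site/src" \
            -v "$d/gohugo/$site/output:/$site/output"
    done
    docker run -d --name gohugo "$@" \
        -v "$d/gohugo/build.sh:/root/build.sh" \
        -v "$d/gohugo/id_rsa:/root/.ssh/id_rsa:ro" \
        -v "$d/gohugo/logs:/root/logs" \
        -v "$d/gitea/data/git/repositories/$owner:/repos:ro" \
        xdevbase/hugobuilder /root/build.sh
}
```

Unlike the DSM wizard, the command line lets you remove and recreate the container with a different start-up command in seconds, which takes some of the sting out of the “set it now or rebuild” limitation.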

Away We Go!

At this point the Docker should now be periodically regenerating your Hugo websites like clockwork. I’ve had this setup running now for many weeks without a single hiccup and on rebooting it comes back to life and just picks up and runs without any issues.

As a final bonus you can also configure the Synology Web Server to point at each Output directory and double-check what’s being posted live if you want to.

Enjoy your automated Hugo build environment that you completely control :)